Friday, 27 May 2016

Tuesday, 24 May 2016

A matter of convergence: building digital instrument clusters with Qt on QNX

Tuukka Turunen

The Qt application framework is widely used in automotive infotainment systems with a variety of operating system and hardware configurations. With digital instrument clusters becoming increasingly common in new models, there are significant synergies to be gained from using the same technologies for both the infotainment system and the cluster. To be able to do this, you need to choose technologies, such as Qt and QNX, that can easily address the requirements of both environments.

Qt is the leading cross-platform technology for the creation of applications and user interfaces for desktop, mobile, and embedded systems. Based on C++, the Qt framework provides fast native performance via a versatile and efficient API. It’s easy to create modern, hardware-accelerated user interfaces using Qt Quick user interface technology and its QML language. Qt comes with an integrated development environment (IDE) tailored for developing applications and embedded devices. Leveraging the QNX Neutrino Realtime OS to run Qt provides significant advantages for addressing the requirements of functional safety.

There is a strong trend in the automotive industry to create instrument clusters using digital graphics rather than traditional electromechanical and analog gauges. Unlike the first digital clusters in the 70s, which used 7-segment displays to indicate speed, today’s clusters typically show a digital representation of the analog speedometer along with an array of other information, such as RPM, navigation, vehicle information, and infotainment content. The benefits compared to analog gauges are obvious; for example, it is possible to adapt the displayed items according to the driver’s needs in different situations, or easily create regional variants, or adapt the style of the instrument cluster to the car model and user’s preferences.

A unified experience — for both developers and users

Traditionally, the speedometer and radio have been two very different systems, but today their development paths are converging. Convergence will drive the need for consistency as otherwise the user experience will be fragmented. To meet the needs of tomorrow’s vehicles, it is essential that the two screens are aware of each other and interoperate. It is also likely that, while these are converging, certain items will remain specific to each domain. Furthermore, the convergence will help accelerate time-to-market for car manufacturers by offering simplified system design and faster development cycles.

Qt, which is already widely used in state-of-the-art in-vehicle infotainment systems and many other complex systems, is an excellent technology to unify the creation of these converging systems. By leveraging the same versatile Qt framework and tools for both the cluster and the infotainment system, it is possible to achieve synergies in the engineering work as well as in the resulting application. With the rich graphics capabilities of Qt, creating attractive user interfaces for a unified experience across all screens of the vehicle cockpit becomes a reality.

Cluster demonstrator built with Qt 5.6.

Maximal efficiency

Qt has been used very successfully in QNX-based automotive and general embedded systems for a long time. To show how well Qt 5.6 and our latest Qt-based cluster demonstrator run on top of the QNX OS, which is pre-certified to ISO 26262 ASIL D, we brought them together on NXP’s widely used i.MX 6 processor. As the cluster HMI is made with Qt, it runs on any platform supported by Qt, including the QNX OS, without having to be rewritten.

The cluster demonstrator leverages Qt Quick for most of the cluster and Qt 3D for the car model. The application logic is written in C++ for maximal efficiency. By using the Qt Quick Compiler, the QML parts run as efficiently as if they too were written in C++, speeding up the startup time by removing the run-time compilation step.

The following video presents the cluster demonstrator running on the QNX OS and the QNX Screen windowing system:

The QNX OS for Safety has been certified to both IEC 61508 SIL 3 and ISO 26262 ASIL D, so it provides a smooth and straightforward path for addressing the functional safety certification of an automotive instrument cluster.

Qt 5.6 has been built for the QNX OS using the GCC toolchain provided by QNX Software Systems. The display of the cluster is a 12.3" HSXGA (1280×480) screen and the CPU is NXP’s i.MX 6 processor, which is well-suited to automotive instrument clusters.

Our research and development efforts continue with a goal to make it straightforward to build sophisticated digital instrument clusters with Qt. We believe that Qt is the best choice for building infotainment systems and clusters, but that it is particularly beneficial when used in both of these. Please contact us to discuss how Qt can be used in automotive, as well as in other industries, or to evaluate the latest Qt version on the QNX platform.

Visit qt.io for more information on Qt.

About Tuukka

Tuukka Turunen leads R&D at The Qt Company. He holds a Master of Science in Engineering and a Licentiate of Technology from the University of Oulu, Finland. He has over 20 years of experience working in a variety of positions in the software industry, especially around connected embedded systems.

Thursday, 28 April 2016

When the rubber ducky hits the road

Paul Leroux

Kidding aside, CSAIL has launched a graduate course on the science of autonomy. This spring, students were tasked with creating a fleet of miniature robo-taxis that could autonomously navigate roads using a single on-board camera and no pre-programmed maps. Here is the (impressive) result:

The course looks like fun (and I’m sure it is), but in the process, students learn how to integrate multiple disciplines, including control theory, machine learning, and computer vision. Which, to my mind, is just ducky. :-)

Thursday, 21 April 2016

Autonomous cars that can navigate winter roads? ‘Snow problem!

A look at what happens when you equip a Ford Fusion with sensor fusion.

Let’s face it, cars and snow don’t mix. A heavy snowfall can tax the abilities of even the best driver — not to mention the best automated driving algorithm. As I discussed a few months ago, snow can mask lane markers, obscure street signs, and block light-detection sensors, making it difficult for an autonomous car to determine where it should go and what it should do. Snow can even trick the car into “seeing” phantom objects.

Automakers, of course, are working on the problem. Case in point: Ford’s autonomous research vehicles. These experimental Ford Fusion sedans create 3D maps of roads and surrounding infrastructure when the weather is good and visibility clear. They then use the maps to position themselves when the road subsequently disappears under a blanket of the white stuff.

How accurate are the maps? According to Ford, the vehicles can position themselves to within a centimeter of their actual location. Compare that to GPS, which is accurate to about 10 yards (9 meters).

To create the maps, the cars use LiDAR scanners. These devices collect a ginormous volume of data about the road and surrounding landmarks, including signs, buildings, and trees. Did I say ginormous? Sorry, I meant gimongous: 600 gigabytes per hour. The scanners generate so many laser points — 2.8 million per second — that some can bounce off falling snowflakes or raindrops, creating the false impression that an object is in the way. To eliminate these false positives, Ford worked with U of Michigan researchers to create an algorithm that filters out snow and rain.
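To put those numbers in perspective, here is a quick back-of-the-envelope calculation. It assumes decimal gigabytes and that the 600 GB per hour and the 2.8 million points per second describe the same data stream. Neither assumption comes from Ford, so treat the per-point figure as a rough illustration only.

```python
# Back-of-the-envelope check on the LiDAR figures quoted above.
# Assumptions (not from Ford): decimal gigabytes, and both figures
# describe the same stream of laser points.
GIGABYTE = 10**9
BYTES_PER_HOUR = 600 * GIGABYTE
POINTS_PER_SECOND = 2_800_000
SECONDS_PER_HOUR = 3600

bytes_per_second = BYTES_PER_HOUR / SECONDS_PER_HOUR
bytes_per_point = bytes_per_second / POINTS_PER_SECOND

print(f"{bytes_per_second / 10**6:.0f} MB per second")   # about 167
print(f"{bytes_per_point:.0f} bytes per laser point")     # about 60
```

In other words, the scanners would be producing roughly a CD's worth of data every four seconds.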

The cars don’t rely solely on LiDAR. They also use cameras and radar, and blend the data from all three sensor types in a process known as sensor fusion. This “fused” approach compensates for the shortcomings of any particular sensor technology, allowing the car to interpret its environment with greater certainty. (To learn more about sensor fusion for autonomous cars, check out this recent EE Times Automotive article from Hannes Estl of TI.)
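For the curious, here is a minimal sketch of one textbook way to blend several estimates of the same quantity: inverse-variance weighting. This is not Ford's method (the post doesn't describe it), and the sensor readings and variances below are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor: str
    distance_m: float  # estimated distance to the object ahead
    variance: float    # uncertainty of the estimate (m^2); smaller means more trusted


def fuse(readings):
    """Combine independent estimates by inverse-variance weighting."""
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    fused_variance = 1.0 / total  # never larger than the best single sensor
    return fused, fused_variance


# Hypothetical readings: LiDAR is precise, the camera much less so.
readings = [
    Reading("lidar", 25.0, 0.04),
    Reading("radar", 25.6, 0.25),
    Reading("camera", 24.0, 1.00),
]
distance, variance = fuse(readings)
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f}")
```

The point to notice is that the fused variance comes out smaller than that of any individual sensor, which is the "greater certainty" part of the story.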

Ford claims to be the first automaker to demonstrate robot cars driving in the snow. But it certainly won’t be the last. To gain worldwide acceptance, robot cars will have to prove themselves on winter roads, so we are sure to see more innovation on this (cold) front. ;-)

In the meantime, dim the lights and watch this short video of Ford’s “snowtonomy” technology:

Did you know? In January, QNX announced a new software platform for ADAS and automated driving systems, including sensor fusion solutions that combine data from multiple sources such as cameras and radar processors. Learn more about the platform here and here.

Paul Leroux

Tuesday, 15 March 2016

Goodbye analog, hello digital

Since 2008, QNX has explored how digital instrument clusters will change the driving experience.

Quick: What do the Alfa Romeo 4C, Audi TT, Audi Q7, Corvette Stingray, Jaguar XJ, Land Rover Range Rover, and Mercedes S Class Coupe have in common?

Answer: They would all look awesome in my driveway! But seriously, they all have digital instrument clusters powered by the QNX Neutrino OS.

QNX Software Systems has established a massive beachhead in automotive infotainment and telematics, with deployments in over 60 million cars. But it’s also moving into other growth areas of the car, including advanced driver assistance systems (ADAS), multi-function displays, and, of course, digital instrument clusters.

The term “digital cluster” means different things to different people. To boomers like myself, it can conjure up memories of 1980s dashboards equipped with less-than-sexy segment displays — just the thing if you want your dash to look like a calculator. Thankfully, digital clusters have come a long way. Take, for example, the slick, high-resolution cluster in the Audi TT. Designed to display everything directly in front of the driver, this QNX-powered system integrates navigation and infotainment information with traditional cluster readouts, such as speed and RPM. It’s so advanced that the folks at Audi don’t even call it a cluster — they call it virtual cockpit, instead.

2008: The first QNX cluster

It’s no coincidence that so many automakers are using the QNX Neutrino OS in their digital clusters. For years now, QNX Software Systems has been exploring how digital clusters can enhance the driving experience and developing technologies to address the requirements of cluster developers.

Let’s start with the very first digital cluster that the QNX team created, a proof-of-concept that debuted in 2008. Despite its vintage, this cluster has several things in common with our more recent clusters — note, for example, the integrated turn-by-turn navigation instructions:

For 2008, this was pretty cool. But as an early proof-of-concept, it lacked some niceties, such as visual cues that could suggest which information is, or isn’t, currently important. For instance, in this screenshot, the gauges for fuel level, engine temperature, and oil pressure all indicate normal operation, so they don’t need to be so prominent. They could, instead, be shrunk or dimmed until they need to alert the driver to a critical change — and indeed, we explored such ideas soon after we created the original design. As you’ll see, the ability to prioritize information for the driver becomes quite sophisticated in subsequent generations of our concept clusters.
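The "dim it until it matters" idea is easy to sketch in code. The gauge names, units, and normal ranges below are hypothetical, chosen only to illustrate the logic; they don't come from any QNX cluster.

```python
from dataclasses import dataclass


@dataclass
class Gauge:
    name: str
    value: float
    normal_min: float
    normal_max: float


def prominence(gauge):
    """Return 'dimmed' while the reading is normal, 'highlighted' otherwise."""
    in_range = gauge.normal_min <= gauge.value <= gauge.normal_max
    return "dimmed" if in_range else "highlighted"


# Hypothetical readings; only the engine temperature is out of range.
gauges = [
    Gauge("fuel level (%)", 62, 15, 100),
    Gauge("engine temperature (C)", 118, 70, 105),
    Gauge("oil pressure (psi)", 40, 25, 65),
]
states = {g.name: prominence(g) for g in gauges}
for name, state in states.items():
    print(f"{name}: {state}")
```

A real cluster would presumably animate the transition and add hysteresis so a gauge doesn't flicker at the edge of its range, but the core decision is this simple.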

Did you know? To create this 2008 cluster, QNX engineers used Adobe Flash Lite 3 and OpenGL ES.

2010: Concept cluster in a Chevrolet Corvette

Next up is the digital cluster in the first QNX technology concept car, based on a Chevrolet Corvette. If the cluster design looks familiar, it should: it’s modeled after the analog cluster that shipped in the 2010-era ‘Vettes. It’s a great example of how a digital instrument cluster can deliver state-of-the-art features, yet still honor the look-and-feel of an established brand. For example, here is the cluster in “standard” mode, showing a tachometer, just as it would in a stock Corvette:

And here it is again, but with something that you definitely wouldn’t find in a 2010 Corvette cluster — an integrated navigation app:

Did you know? The Corvette is the only QNX technology concept car that I ever got to drive.

2013: Concept cluster in a Bentley Continental GT

Next up is the digital cluster for the 2013 QNX technology concept car, based on a Bentley Continental GT. This cluster took the philosophy embodied in the Corvette cluster — honor the brand, but deliver forward-looking features — to the next level.

Are you familiar with the term Trompe-l’œil? It’s a French expression that means “deceive the eye” and it refers to art techniques that make 2D objects appear as if they are 3D objects. It’s a perfect description of the gorgeously realistic virtual gauges we created for the Bentley cluster:

Because it was digital, this cluster could morph itself on the fly. For instance, if you put the Bentley in Drive, the cluster would display a tach, gas gauge, temperature gauge, and turn-by-turn directions — the cluster pulled these directions from the head unit’s navigation system. And if you threw the car into Reverse, the cluster would display a video feed from the car’s backup camera. The cluster also had other tricks up its digital sleeve, such as displaying information from the car’s media player.
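As a rough sketch, that gear-dependent behavior boils down to a lookup from gear to a set of widgets. The widget names are made up for illustration, and the fallback layout for other gears is an assumption; only the Drive and Reverse contents follow the description above.

```python
from enum import Enum


class Gear(Enum):
    PARK = "P"
    REVERSE = "R"
    DRIVE = "D"


# Hypothetical widget names. Drive and Reverse follow the description
# above; the default layout for any other gear is an assumption.
LAYOUTS = {
    Gear.DRIVE: [
        "tachometer",
        "fuel gauge",
        "temperature gauge",
        "turn-by-turn directions",
    ],
    Gear.REVERSE: ["backup camera feed"],
}
DEFAULT_LAYOUT = ["tachometer", "fuel gauge", "temperature gauge"]


def layout_for(gear):
    """Pick the widgets the cluster should show for the selected gear."""
    return LAYOUTS.get(gear, DEFAULT_LAYOUT)


for gear in Gear:
    print(gear.name, "->", ", ".join(layout_for(gear)))
```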

Did you know? The Bentley came equipped with a 616 hp W12 engine that could do 0-60 mph in a little over 4 seconds. Which may explain why they never let me drive it.

2014: Concept cluster in a Mercedes CLA45 AMG

Up next is the 2014 QNX technology concept car, based on a Mercedes CLA45 AMG. But before we look at its cluster, let me tell you about the Plymouth safety speedometer. Designed to curb speeding, it alerted the driver whenever he or she leaned too hard on the gas.

But here’s the thing: the speedometer made its debut in 1939. And given the limitations of 1939 technology, the speedometer couldn’t take driving conditions or the local speed limit into account. So it always displayed the same warnings at the same speeds, no matter what the speed limit.

Connectivity to the rescue! Some modern navigation systems include information on local speed limits. By connecting the CLA45’s concept cluster to the navigation system in the car’s head unit, the QNX team was able to pull this information and display it in real time on the cluster, creating a modern equivalent of Plymouth's 1939 invention.
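The difference from the 1939 design is simply that the threshold is now an input rather than a constant. Here is a minimal sketch of that check; the function name is hypothetical, and treating a missing limit as "no alert" is my assumption, not a documented behavior of the concept cluster.

```python
from typing import Optional


def speed_alert(speed_mph: float, limit_mph: Optional[float]) -> bool:
    """True when the car is over the limit reported by the navigation system."""
    if limit_mph is None:  # no limit data for this stretch of road
        return False
    return speed_mph > limit_mph


print(speed_alert(52, 40))    # over the limit
print(speed_alert(38, 40))    # under the limit
print(speed_alert(90, None))  # limit unknown, so stay quiet
```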

Look at the image below. You’ll see the local speed limit surrounded by a red circle, alerting the driver that they are breaking the limit. The cluster could also pull other information from the head unit, including turn-by-turn directions, trip information, album art, and other content normally relegated to the center display:

Did you know? Our Mercedes concept car is still alive and well in Germany, and recently made an appearance at the Embedded World conference in Nuremberg.

2015: Concept cluster in a Maserati Quattroporte

Up next is the 2015 QNX technology concept car, based on a Maserati Quattroporte GTS. Like the cluster in the Mercedes, this concept cluster provided speed alerts. But it could also recommend an appropriate speed for upcoming curves and warn of obstacles on the road ahead. It even provided intelligent parking assist to help you back into tight spaces.
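How might a cluster pick a speed for an upcoming curve? I can't speak for the concept car's actual logic, but basic physics offers one plausible approach: cap the lateral acceleration, which equals speed squared divided by the curve radius. In the sketch below, the 2.0 m/s² comfort limit and the function names are assumptions made for illustration.

```python
import math

MPS_TO_MPH = 2.236936  # metres per second to miles per hour


def recommended_curve_speed_mph(radius_m, max_lateral_accel=2.0):
    """Highest speed that keeps lateral acceleration (v^2 / r) under the cap.

    max_lateral_accel is in m/s^2; 2.0 is an assumed comfort limit.
    """
    if radius_m <= 0:
        raise ValueError("radius must be positive")
    return math.sqrt(max_lateral_accel * radius_m) * MPS_TO_MPH


def curve_warning(speed_mph, radius_m):
    """True if the driver should slow down before the curve."""
    return speed_mph > recommended_curve_speed_mph(radius_m)


print(f"{recommended_curve_speed_mph(100):.1f} mph for a 100 m radius curve")
print(curve_warning(45, 100))
```

The curve radius itself would have to come from the navigation database or from sensors, which is exactly the kind of head unit data the cluster was already pulling.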

Here is the cluster displaying a speed alert:

And here it is again, using input from a LiDAR system to issue a forward collision warning:

Did you know? Engadget selected the “digital mirrors” we created for the Maserati as a finalist for the Best of CES Awards 2015.

2015 and 2016: Concept clusters in QNX reference vehicle

The QNX reference vehicle, based on a Jeep Wrangler, is our go-to vehicle for showcasing the latest capabilities of the QNX CAR Platform for Infotainment. But it also does double duty as a technology concept vehicle. For instance, in early 2015, we equipped the Jeep with a concept cluster that provides lane departure warnings, collision detection, and curve speed warnings. In this image, the cluster is recommending that you reduce speed to safely navigate an upcoming curve:

Just in time for CES 2016, the Jeep cluster got another makeover that added crosswalk notifications to the mix:

Did you know? Jeep recently unveiled the Trailcat, a concept Wrangler outfitted with a 707 hp Dodge Hellcat engine.

2016: Glass cockpit in a Toyota Highlander

By now, you can see how advances in sensors, navigation databases, and other technologies enable us to integrate more information into a digital instrument cluster, all to keep the driver aware of important events in and around the vehicle. In our 2016 technology concept vehicle, we took the next step and explored what would happen if we did away with an infotainment system altogether and integrated everything — speed, RPM, ADAS alerts, 3D navigation, media control and playback, incoming phone calls, etc. — into a single cluster display.

On the one hand, this approach presented a challenge, because, well… we would be integrating everything into a single display! Things could get busy, fast. On the other hand, this approach presents everything of importance directly in front of the driver, where it is easiest to see. No more glancing over at a centrally mounted head unit.

Simplicity was the watchword. We had to keep distraction to a minimum, and to do that, we focused on two principles: 1) display only the information that the driver currently requires; and 2) use natural language processing as the primary way to control the user interface. That way, drivers can access infotainment content while keeping their hands on the wheel and eyes on the road.

For instance, in the following scenario, the cockpit allows the driver to see several pieces of important information at a glance: a forward-collision warning, an alert that the car is exceeding the local speed limit by 12 mph, and map data with turn-by-turn navigation:

This design also aims to minimize the mental translation, or cognitive processing, needed on the part of the driver. For instance, if you exceed the speed limit, the cluster doesn’t simply show your current speed. It also displays a red line (visible immediately below the 52 mph readout) that gives you an immediately recognizable hint that you are going too fast. The more you exceed the limit, the thicker the red line grows.
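Here is a minimal sketch of how such a line could scale. The half-pixel-per-mph growth rate and the 12-pixel cap are invented values, and the 40 mph limit in the example assumes that the 52 mph readout and the "12 mph over" alert mentioned earlier describe the same screen.

```python
def red_line_thickness(speed_mph, limit_mph, px_per_mph=0.5, max_px=12.0):
    """Thickness in pixels of the over-limit line; 0 means the line is hidden.

    px_per_mph and max_px are hypothetical tuning values.
    """
    excess = max(0.0, speed_mph - limit_mph)
    return min(max_px, excess * px_per_mph)


print(red_line_thickness(52, 40))   # 12 mph over the limit
print(red_line_thickness(35, 40))   # under the limit, no line
print(red_line_thickness(100, 40))  # far over, capped at max_px
```

The cap matters: without it, the line could eventually swallow the readout it is supposed to annotate.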

The 26262 connection

Today’s digital instrument clusters require hardware and software solutions that can support rich graphics and high-level application environments while also displaying critical information (e.g. engine warning lights, ABS indicators) in a fast and highly reliable fashion. The need to isolate critical from non-critical software functions in the same environment is driving the requirement for ISO 26262 certification of digital clusters.

QNX OS technology, including the QNX OS for Safety, is ideally suited for environments where a combination of infotainment, advanced driver assistance system (ADAS), and safety-related information is displayed. Building a cluster with the ISO 26262 ASIL-D certified QNX OS for Safety can make it simpler to keep software functions isolated from each other and less expensive to certify the end cluster product.

The partner connection

Partnerships are also important. If you had the opportunity to drop by our booth at CES 2016, you would have seen a “cluster innovation wall” that showcased QNX OS technology integrated with user interface design tools from the industry’s leading cluster software providers, including 3D Incorporated’s REMO HMI Runtime, Crank Software’s Storyboard Suite, DiSTI Corporation’s GL Studio, Elektrobit’s EB GUIDE, HI Corporation’s exbeans UI Conductor, and Rightware’s Kanzi UI software. This pre-integration with a rich choice of partner tools enables our customers to choose the user interface technologies and design approaches that best address their instrument cluster requirements.

Paul Leroux

Retrofitting the QNX reference vehicle with a new digital cluster.

Now here’s the thing: digital clusters require higher-end CPUs and more software than their analog predecessors, not to mention large LCD panels. So why are automakers adopting them? Several reasons come to mind:

- Reusable — With a digital cluster, automakers can deploy the same hardware across multiple vehicle lines simply by reskinning the graphics.

- Simple — Digital clusters can help reduce driver distraction by displaying only the information that the driver currently requires.

- Scalable — Automakers can add functionality to a digital cluster by changing the software only; they don’t have to incur the cost of machining or adding new physical components.

- Attractive — A digital instrument cluster can enhance the appeal of a vehicle with eye-catching graphics and features.

2008: The first QNX cluster

It’s no coincidence that so many automakers are using the QNX Neutrino OS in their digital clusters. For years now, QNX Software Systems has been exploring how digital clusters can enhance the driving experience and developing technologies to address the requirements of cluster developers.

Let’s start with the very first digital cluster that the QNX team created, a proof-of-concept that debuted in 2008. Despite its vintage, this cluster has several things in common with our more recent clusters — note, for example, the integrated turn-by-turn navigation instructions:

For 2008, this was pretty cool. But as an early proof-of-concept, it lacked some niceties, such as visual cues that could suggest which information is, or isn’t, currently important. For instance, in this screenshot, the gauges for fuel level, engine temperature, and oil pressure all indicate normal operation, so they don’t need to be so prominent. They could, instead, be shrunk or dimmed until they need to alert the driver to a critical change — and indeed, we explored such ideas soon after we created the original design. As you’ll see, the ability to prioritize information for the driver becomes quite sophisticated in subsequent generations of our concept clusters.

Did you know? To create this 2008 cluster, QNX engineers used Adobe Flash Lite 3 and OpenGL ES.

2010: Concept cluster in a Chevrolet Corvette

Next up is the digital cluster in the first QNX technology concept car, based on a Chevrolet Corvette. If the cluster design looks familiar, it should: it’s modeled after the analog cluster that shipped in the 2010-era ‘Vettes. It’s a great example of how a digital instrument cluster can deliver state-of-the-art features, yet still honor the look-and-feel of an established brand. For example, here is the cluster in “standard” mode, showing a tachometer, just as it would in a stock Corvette:

And here it is again, but with something that you definitely wouldn’t find in a 2010 Corvette cluster — an integrated navigation app:

Did you know? The Corvette is the only QNX technology concept car that I ever got to drive.

2013: Concept cluster in a Bentley Continental GT

Next up is the digital cluster for the 2013 QNX technology concept car, based on a Bentley Continental GT. This cluster took the philosophy embodied in the Corvette cluster — honor the brand, but deliver forward-looking features — to the next level.

Are you familiar with the term Trompe-l’œil? It’s a French expression that means “deceive the eye” and it refers to art techniques that make 2D objects appear as if they are 3D objects. It’s a perfect description of the gorgeously realistic virtual gauges we created for the Bentley cluster:

Because it was digital, this cluster could morph itself on the fly. For instance, if you put the Bentley in Drive, the cluster would display a tach, gas gauge, temperature gauge, and turn-by-turn directions — the cluster pulled these directions from the head unit’s navigation system. And if you threw the car into Reverse, the cluster would display a video feed from the car’s backup camera. The cluster also had other tricks up its digital sleeve, such as displaying information from the car’s media player.

Did you know? The Bentley came equipped with a 616 hp W12 engine that could do 0-60 mph in a little over 4 seconds. Which may explain why they never let me drive it.

2014: Concept cluster in a Mercedes CLA45 AMG

|

| Plymouth safety speedometer, c 1939 |

But here’s the thing: the speedometer made its debut in 1939. And given the limitations of 1939 technology, the speedometer couldn’t take driving conditions or the local speed limit into account. So it always displayed the same warnings at the same speeds, no matter what the speed limit.

Connectivity to the rescue! Some modern navigation systems include information on local speed limits. By connecting the CLA45’s concept cluster to the navigation system in the car’s head unit, the QNX team was able to pull this information and display it in real time on the cluster, creating a modern equivalent of Plymouth's 1939 invention.

Look at the image below. You’ll see the local speed limit surrounded by a red circle, alerting the driver that they are breaking the limit. The cluster could also pull other information from the head unit, including turn-by-turn directions, trip information, album art, and other content normally relegated to the center display:

Did you know? Our Mercedes concept car is still alive and well in Germany, and recently made an appearance at the Embedded World conference in Nuremburg.

2015: Concept cluster in a Maserati Quattroporte

Up next is the 2015 QNX technology concept car, based on a Maserati Quattroporte GTS. Like the cluster in the Mercedes, this concept cluster provided speed alerts. But it could also recommend an appropriate speed for upcoming curves and warn of obstacles on the road ahead. It even provided intelligent parking assist to help you back into tight spaces.

Here is the cluster displaying a speed alert:

And here it is again, using input from a LiDAR system to issue a forward collision warning:

Did you know? Engadget selected the “digital mirrors” we created for the Maserati as a finalist for the Best of CES Awards 2015.

2015 and 2016: Concept clusters in QNX reference vehicle

The QNX reference vehicle, based on a Jeep Wrangler, is our go-to vehicle for showcasing the latest capabilities of the QNX CAR Platform for Infotainment. But it also does double-duty as a technology concept vehicle. For instance, in early 2015, we equipped the Jeep with a concept cluster that provides lane departure warnings, collision detection, and curve speed warnings. For instance, in this image, the cluster is recommending that you reduce speed to safely navigate an upcoming curve:

Just in time for CES 2016, the Jeep cluster got another makeover that added crosswalk notifications to the mix:

Did you know? Jeep recently unveiled the Trailcat, a concept Wrangler outfitted with a 707HP Dodge Hellcat engine.

2016: Glass cockpit in a Toyota Highlander

By now, you can see how advances in sensors, navigation databases, and other technologies enable us to integrate more information into a digital instrument cluster, all to keep the driver aware of important events in and around the vehicle. In our 2016 technology concept vehicle, we took the next step and explored what would happen if we did away with an infotainment system altogether and integrated everything — speed, RPM, ADAS alerts, 3D navigation, media control and playback, incoming phone calls, etc. — into a single cluster display.

By now, you can see how advances in sensors, navigation databases, and other technologies enable us to integrate more information into a digital instrument cluster, all to keep the driver aware of important events in and around the vehicle. In our 2016 technology concept vehicle, we took the next step and explored what would happen if we did away with an infotainment system altogether and integrated everything — speed, RPM, ADAS alerts, 3D navigation, media control and playback, incoming phone calls, etc. — into a single cluster display. On the one hand, this approach presented a challenge, because, well… we would be integrating everything into a single display! Things could get busy, fast. On the other hand, this approach presents everything of importance directly in front of the driver, where it is easiest to see. No more glancing over at a centrally mounted head unit.

Simplicity was the watchword. We had to keep distraction to a minimum, and to do that, we focused on two principles: 1) display only the information that the driver currently requires; and 2) use natural language processing as the primary way to control the user interface. That way, drivers can access infotainment content while keeping their hands on the wheel and eyes on the road.

For instance, in the following scenario, the cockpit allows the driver to see several pieces of important information at a glance: a forward-collision warning, an alert that the car is exceeding the local speed limit by 12 mph, and map data with turn-by-turn navigation:

This design also aims to minimize the mental translation, or cognitive processing, needed on the part of the driver. For instance, if you exceed the speed limit, the cluster doesn’t simply show your current speed. It also displays a red line (visible immediately below the 52 mph readout) that gives you an immediately recognizable hint that you are going too fast. The more you exceed the limit, the thicker the red line grows.
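The growing red line can be sketched as a simple mapping from overspeed to line thickness. This is only an illustration, not the code running in the concept vehicle: the function name, the pixel values, and the linear scaling are all assumptions.

```python
def overspeed_line_thickness(speed_mph, limit_mph,
                             px_per_mph=0.5, min_px=2.0, max_px=12.0):
    """Return the thickness (in pixels) of the red overspeed line.

    All parameters and defaults are hypothetical; the concept cluster's
    real scaling is not described in the post.
    """
    excess = speed_mph - limit_mph
    if excess <= 0:
        # At or below the limit: no warning line at all.
        return 0.0
    # Grow linearly with the excess speed, but cap the thickness so the
    # line never crowds out the rest of the display.
    return min(max_px, min_px + px_per_mph * excess)


# The scenario from the post: a 52 mph readout, 12 mph over the limit.
print(overspeed_line_thickness(52, 40))
```

Capping the thickness is a design choice made for this sketch; a real cluster might switch to a different alert altogether once the excess becomes large.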

The 26262 connection

Today’s digital instrument clusters require hardware and software solutions that can support rich graphics and high-level application environments while also displaying critical information (e.g. engine warning lights, ABS indicators) in a fast and highly reliable fashion. The need to isolate critical from non-critical software functions in the same environment is driving the requirement for ISO 26262 certification of digital clusters.

QNX OS technology, including the QNX OS for Safety, is ideally suited for environments where a combination of infotainment, advanced driver assistance system (ADAS), and safety-related information is displayed. Building a cluster with the ISO 26262 ASIL-D certified QNX OS for Safety can make it simpler to keep software functions isolated from each other and less expensive to certify the end cluster product.

The partner connection

Partnerships are also important. If you had the opportunity to drop by our booth at 2016 CES, you would have seen a “cluster innovation wall” that showcases QNX OS technology integrated with user interface design tools from the industry’s leading cluster software providers, including 3D Incorporated’s REMO HMI Runtime, Crank Software’s Storyboard Suite, DiSTI Corporation’s GL Studio, Elektrobit’s EB GUIDE, HI Corporation’s exbeans UI Conductor, and Rightware’s Kanzi UI software. This pre-integration with a rich choice of partner tools enables our customers to choose the user interface technologies and design approaches that best address their instrument cluster requirements.

For some partner insights on digital cluster design, check out these posts:

- Elektrobit — Digital instrument clusters and the road to autonomous driving

- Crank — Reimagining digital instrument cluster design

- Rightware — Top 5 challenges of digital instrument clusters

- DiSTI — Bringing safety assurance to automotive instrument clusters

Tuesday, 23 February 2016

QNX OS for Safety named best software product at Embedded World

“Winning takes talent, to repeat takes character” — legendary basketball coach John Wooden

Earlier today, at Embedded World 2016, QNX won an embedded AWARD for its QNX OS for Safety, an operating system designed for safety-critical applications in the automotive, rail transportation, healthcare, and industrial automation markets. The OS was named best product in the software category.

This award win is a testament to the commitment and integrity that drives QNX to continuously release world-class products. In fact, this marks the fourth time that QNX Software Systems has won an embedded AWARD. In 2014, it took top honors for QNX Acoustics for Active Noise Control (ANC), a software library that cancels out distracting engine noise in cars while eliminating the dedicated hardware required by conventional ANC solutions. The company also won in 2006 for its multicore-enabled operating system and development tools, and in 2004 for power management technology.

The QNX OS for Safety is built on a highly reliable software architecture proven in nuclear power plants, train control systems, laser eye-surgery devices, and a variety of other safety-critical environments. It was created to meet the rigorous IEC 61508 functional safety standard as well as industry-specific standards based on IEC 61508. These include ISO 26262 for passenger vehicles, EN 50128 for railway applications, IEC 62304 for medical devices, and IEC 61511 for factory automation, process control, and robotics.

Hats off to the many talented QNX staffers responsible for developing, certifying, promoting, and selling the QNX OS for Safety!

|

| Patryk Fournier |

|

| The media scrum at today's award ceremony. |

Tuesday, 26 January 2016

Award season

|

| Patryk Fournier |

In the category of Best Backhaul Software or Development Platform for Automakers, the winner is… QNX Software Systems.

Thank you so much to Auto Connected Car News and all the people and companies who voted for us in the Tech CARS Awards. We pride ourselves on offering flexible development platforms that enable automakers to deliver unique, branded experiences. Working with leading-edge automakers and Tier 1 suppliers drives us (pardon the pun) to continue upping our game in advanced platforms for infotainment, digital instrument clusters, advanced driver assistance systems (ADAS), and acoustics — including, of course, the recently announced QNX Platform for ADAS and QNX Acoustics Management Platform.

We would also like to congratulate our fellow award winners, Ford and Harman. Ford won for Overall Best Car Infotainment Software by Automaker for their QNX-powered SYNC 3 connectivity system.

And speaking of Ford, the GSMA Global Mobile Awards recently announced their shortlist of finalists. And we just happen to be a finalist in the category of Best Mobile Innovation for Automotive for our work in Ford SYNC 3.

|

| QNX-powered Ford SYNC 3: Shortlisted for a 2016 Glomo Award. Source: Ford |

Tuesday, 12 January 2016

“I don’t know where I’m going from here, but I promise it won’t be boring”

|

| Patryk Fournier |

CES 2016 stretched into the weekend this year and ICYMI there was a lot of compelling media coverage of QNX and BlackBerry. Here’s a roundup of the most interesting coverage from the weekend:

Ars Technica: QNX demos new acoustic and ADAS technologies

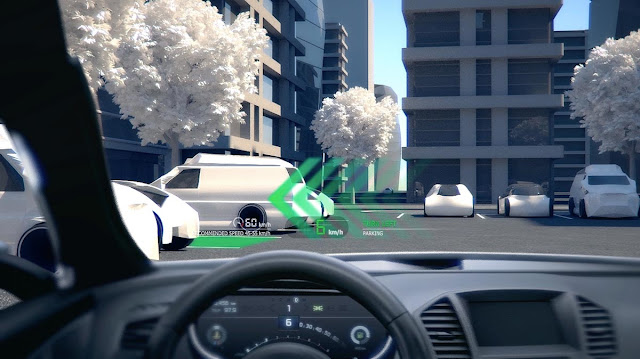

The crew from Ars Technica filmed a terrific demonstration of the QNX Acoustics Management Platform and the QNX Platform for ADAS. The demonstration highlights the power and versatility of the acoustics platform, including the QNX In-Car Communication module, which allows the driver to effortlessly speak to passengers in the back of the vehicle, over the roar of an engine revving at high speed. The demonstration also showcases how the QNX OS can support augmented reality and head-up displays:

Huffington Post: CES 2016 Proves The Future Of Driverless Cars Is Promising

Huffington Post highlighted BlackBerry and QNX as key newsmakers for advancements in driverless cars. The article notes QNX’s automotive leadership: “The software is actually installed in 50 per cent of the world’s automotive infotainment systems including Audi, Volkswagen, Ford, GM and Chrysler.”

CrackBerry: Inside the QNX Toyota Highlander at CES 2016

The folks at CrackBerry filmed a demonstration of our latest technology concept vehicle, based on a Toyota Highlander. The demo focuses on the QNX In-Car Communication acoustics module, which forms part of the recently launched QNX Acoustics Management Platform:

HERE 360: QNX and HERE bring to life a multi-screen experience in vehicles

A blog post from our ecosystem partner mentions HERE navigation and its use in the Toyota Highlander and Jeep Wrangler technology concept vehicles.

Thursday, 07 January 2016

Why is software the key to bringing augmented reality to cars?

Guest post by Alex Leonov, marketing director, Luxoft Automotive.

While self-driving vehicles are gradually becoming a reality, more and more of today’s cars roll out from factories featuring advanced driver assistance systems (ADAS). We are quickly getting used to adaptive cruise control, blind spot monitoring, parking assistance, lane departure warning, and many other features that make driving safer and the driver’s job easier. Data from cameras, sensors, and V2X infrastructure feed into ADAS systems, increasing their accuracy and efficiency. These systems are important steps toward fully autonomous driving, but the ultimate responsibility for decision making still lies with a driver.

The more that cars become connected, the more the average driver can be bombarded by information while driving. “In 500 feet make a right turn.” “You have an incoming call from Christine.” “You have a new message on Facebook.” “You are over the speed limit.” This may not be so big a distraction under normal conditions. But sometimes, when driving in hectic city traffic or in a snowstorm, it is critical to keep your eyes on the road while still receiving essential information. The good news is that the technology to remedy this is already here.

Heads up for HUDs

Keeping the driver’s eyes on the road is a priority, and head-up displays (HUDs) can accomplish just that. They project alerts and navigation prompts right on the windshield. Analysts predict explosive growth in HUDs, with the market reaching close to US$100 billion by 2020. The bulk of HUDs are relatively simple combiners, but more advances in wide-field-of-view HUDs are coming soon.

HUDs are perfect for presenting information in a convenient, natural way, and giving the driver a feeling of being in control. But HUDs are only as good as the information they display. That is why it is critical to have solid and reliable data processing and decision-making algorithms, running on a reliable OS, that can prioritize and filter data. The resulting alerts and prompts must be communicated to a driver in a clear, transparent way.

Computer vision, also known as machine vision, is a key to processing the endless flow of data. With its human-like image recognition ability, computer vision processes road scenes, and the system fuses data from multiple sources. Add in a natural representation of processing outcomes in the form of augmented reality, while tracking the driver’s pupils, and you have a completely new level of driver experience: safe and intuitive.

Next-generation driving experience

At Luxoft, we’ve been working on making this experience a reality. The result is CVNAR, a computer vision and augmented reality solution. CVNAR is a powerful software framework containing mathematical algorithms that process a vast amount of road data in real time to generate intuitive prompts and alerts. CVNAR has built-in algorithms for road and pedestrian detection, vehicle recognition and tracking, lane detection, facade recognition and texture extraction, road sign recognition, and parking space search. It performs relative and absolute positioning and easily integrates with navigation, the map database, sensors, and other data sources. A unique feature of CVNAR is its extrapolation engine for latency avoidance.

CVNAR works perfectly with LCD displays and smartglasses, but it is ultimately built for HUDs. Data from cameras, sensors, CAN, and navigation maps are fused and processed to create an extendable metadata output that describes all augmented objects. It takes a HUD and an eye-tracking camera to implement CVNAR in a vehicle. CVNAR will track the driver’s gaze and adjust the position of the augmented objects in the driver’s line of sight to make sure they don’t obstruct anything important — all in real time.

This is not all that CVNAR can do. New car models come packed with infotainment features that take time to learn and memorize. The CVNAR-based smartphone app can help. It turns your smartphone into an interactive guide. Point your phone camera to your dashboard and use augmented prompts to find out more about a particular car function. It can work under the hood, too.

Era of a software-defined car

A modern car runs on code as much as it runs on gasoline (or a battery-powered electric motor). Today, it takes over 100 million lines of software code to get a premium car going, and the amount of software necessary keeps expanding. At Luxoft, we are excited about the car’s digital future, and we work every day to help bring it about, by developing cutting-edge automotive solutions for leading global vehicle manufacturers.

Offering a wide range of embedded software development and integration services for in-vehicle infotainment and telematics systems, digital instrument clusters, and head-up displays, Luxoft has developed User Experience (UX) and Human Machine Interface (HMI) technology for millions of vehicles on the road today. We push the envelope of technology in such areas as situation-aware HMI, computer vision and augmented reality, while Luxoft’s products, the Populus and Teora UX and HMI design tool chains, power the development of award-winning automotive HMIs and slash time to market.

Software holds the key to the future of cars. It is essential to creating a customized user experience in vehicles. With over-the-air updates, software offers unmatched flexibility and scalability. Finally, it takes safety to the next level with its ability to simulate human-like logic through complex algorithms.

You can view Luxoft’s CVNAR solution running on a QNX-based ADAS demo this week at CES, in the BlackBerry booth: LVCC North Hall, #325.

About Alex

Alex Leonov has been in the automotive and IT industry for over 18 years in various business development and marketing roles. Currently, Alex leads the global marketing efforts of Luxoft Automotive.

|

| Projecting alerts and navigation prompts directly on the windshield. |

|

| Detecting and recognizing road signs, pedestrians, traffic lanes, gas stations, and other objects. |

|

| Alerting the driver to an empty parking spot. |

In the zone — a visit to the QNX concept garage

Guest post by QNX consultant and software designer Rob Krten.

How often have you heard the expression, “If it were easy to do, everyone would do it”? I’m constantly amazed at the things that QNX does with their concept cars. To me, a car is an inviolate object that must be touched only by the dealer (well, ok, I do top up the windshield wiper fluid and I once changed a battery). I don’t say that because I necessarily like to give the dealer money, but I just don’t want to break anything that’ll cost me more to get fixed properly later.

Pushing the envelope, however, means getting right in there and doing stuff. QNX engineers have done this for their technology concept cars — from replacing the mirrors with LCD screens, to getting right into the dash and rebuilding it, to adding cameras into the antenna fin on the roof. It’s nothing for them to rip out the center console and then look at all the wiring and go, “Huh, ok — so we need to lengthen this wire, add a shim here, move this piece,” and so on. They are fearless.

Sometimes the “getting right in there” is physical; other times, it’s software based — such as making a new application that lives in the infotainment stack or that interfaces with a smartphone. Like a “Dude, where’s my car?” feature — when your Bluetooth phone unpairs with your car, the phone records the current GPS position. Later, when you’re looking for your car, your phone can recall this last stored GPS position — this must be where you left your car. Or even simple aids, such as a radio tuner that detects when you are losing an AM/FM signal and automatically switches to the corresponding digital station, so you can continue listening to your favorite station anywhere you drive.
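To make the “Dude, where’s my car?” idea concrete, here is a minimal sketch of the logic on the phone side. The class and method names are hypothetical, and so are the coordinates; a real app would hook into the platform’s Bluetooth and location services, which are not shown here.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# A (latitude, longitude) pair in decimal degrees.
Position = Tuple[float, float]


@dataclass
class CarLocator:
    """Remembers where the phone last lost its Bluetooth link to the car."""
    last_position: Optional[Position] = None

    def on_bluetooth_disconnected(self, current_gps: Position) -> None:
        # The link to the car just dropped, so the phone's current
        # position is our best guess at where the car is parked.
        self.last_position = current_gps

    def find_my_car(self) -> Optional[Position]:
        # Returns None if the phone has never been connected to the car.
        return self.last_position


locator = CarLocator()
locator.on_bluetooth_disconnected((45.3500, -75.9200))  # made-up coordinates
print(locator.find_my_car())
```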

Curious to see what the future holds, and to actually see some of this work in action, I invited myself down to the “garage” at QNX headquarters. It’s at the far end of the building, next to the cafeteria. The hallway is festooned with posters of previous QNX concept vehicles, highlighting success stories in 3-foot-high glory.

The day I visited, there were half a dozen people in the garage, and two vehicles: a Jeep and a Highlander (otherwise known as the QNX reference vehicle and QNX technology concept vehicle). The garage is a combination of software development lab, hardware development lab, simulation environment, and actual garage (but without the greasy/oily smell). I wanted to get a sense of what drives these people, what they do, and how they do it.

Digital analogs

The first thing I learned was that there are no real limits. They have the freedom to innovate, without preconceived notions about how things should look. For example, a lead designer on the team (let’s call him Allan, because that’s his name), explained how they look at the controls in the car’s dash display area. In the era of analog, the speedometer had a certain look — it was usually a needle rotating about a central point, where the needle pointed to the speed you were going. In the very early era of digitization, car manufacturers changed this needle to a seven-segment numerical display.

Of course, this was a failure, because the human brain is basically analog; it likes to see nice, continuous changes for processes that are continuous — such as the speed that you’re going. Seven-segment digits change too “randomly”; they require higher-level cognitive functions to parse what the individual lights mean and convert that into digits, and then convert that into a “speed” (and then convert that into “too slow,” or “just right,” or “too fast,” and then, finally, convert that into “apply brake” or “press down on throttle”).

Allan pointed out that changing to a digital display didn’t necessarily mean that they had to slavishly follow the analog “physical” appearance (except do it on an LCD display), but that they were free to experiment with “fill concepts”: digitally controlled analogs to the actual controls. We likened it to the displays in military avionics, where the most important information becomes bigger as it increases in importance. Consider a fighter jet at 20,000 feet: the altitude isn’t nearly as important as it is at 300 feet. Therefore, at 20,000 feet, the part showing the altitude is small, and in a less prominent position than it is when the plane is at 300 feet. The same thing with your speedometer: if you’re doing the speed limit, it’s not as important to show your current speed (you’re most likely flowing with traffic) as it is when you’re 20 over (or under).

You could do the same thing with your fuel range — when you have a full tank, the indicator can be off in a corner somewhere. But as you start to run low, the indicator can get bigger or more prominent, to start nagging you to refuel. By having the displays all be “virtual” on a large LCD screen in the dash, the designers have incredible flexibility to create systems that present relevant information when required, and have it move out of the way when something more important comes along. (Come to think of it, this would be an awesome feature to have on turn-signal indicators — after you’ve kept your blinker on for more than 10 seconds, it would start to get bigger and brighter. Maybe then people would stop driving with their turn indicator permanently on.)

Collision avoided: The V2X command center

Also in the lab was a huge (3 by 5 foot) flat-panel touchscreen, mounted at an angle that’s aggressively unfriendly to coffee cups (probably for that very reason). It’s reminiscent of Star Trek’s main transporter control station, but it’s used to control and display the simulation environment’s V2V (vehicle to vehicle) and V2I (vehicle to infrastructure) data. It acts as a command center to control and reveal the innards of what’s going on in the simulation environment:

When I was there, we ran a vehicle collision avoidance scenario. Two vehicles (the Jeep and the Highlander, of course — they’re tied in to the system) were heading on a collision course (one was southbound and one was eastbound in a grid-style road system). Because they have V2V capabilities, both cars were aware of their impending doom. This showed up nicely on the V2V command center control panel — two cars heading towards each other, little red circles emanating from them indicating the realtime V2V “pings.” Of course, in plenty of time, the Jeep slowed down to avoid the collision (the actual brake lights even went on!). The speed, GPS coordinates, direction, and even what gear each vehicle was in were all shown on the master console. Towards the end of my visit I almost had Allan convinced to do another master control console for the OBDII connector so you could interact with all of the information in each car. What can I say? I like front panels. (I’m a reformed PDP-8 collector.)
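For the curious, the kind of check behind such a scenario can be sketched in a few lines. This is a deliberately simplified illustration, not the logic used in the QNX simulation: it assumes two vehicles approaching the same intersection on perpendicular roads at constant speed, and the function names and the 2-second margin are made up.

```python
import math


def time_to_intersection(distance_m, speed_mps):
    """Seconds until a vehicle reaches the intersection at constant speed."""
    if speed_mps <= 0:
        # A stopped vehicle never arrives.
        return math.inf
    return distance_m / speed_mps


def collision_risk(dist_a_m, speed_a_mps, dist_b_m, speed_b_mps, margin_s=2.0):
    """Return True if both vehicles would reach the intersection within
    margin_s seconds of each other (hypothetical threshold)."""
    t_a = time_to_intersection(dist_a_m, speed_a_mps)
    t_b = time_to_intersection(dist_b_m, speed_b_mps)
    if math.isinf(t_a) or math.isinf(t_b):
        return False
    return abs(t_a - t_b) < margin_s


# Both vehicles 100 m out at 20 m/s: they arrive at the same moment.
print(collision_risk(100, 20, 100, 20))
# One vehicle slows to 10 m/s: it now arrives 5 s later, and the risk clears.
print(collision_risk(100, 10, 100, 20))
```

In a V2V setup, each car would feed this kind of check with the speed and position it receives from the other vehicle, which is why the broadcast “pings” matter.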

The engineers in the concept garage are “in the zone.” They’re working in an environment that encourages innovation. Watch and see what they produce:

About Rob

Rob is president of Iron Krten Consulting, which provides technical leadership services, from software leadership consulting through to security and embedded software products, development, training and contract services. Rob is also engaged by QNX Software Systems to write marketing and technical documentation. Visit Rob's website.

|

| Redoing the dash of the QNX reference vehicle. |

|

| No, not that kind of digital display. Credit: Peter Halasz |

|

| In this image from the new QNX technology concept vehicle, the digital instrument cluster is warning that a forward collision is imminent, and that the driver is exceeding the speed limit by 12 mph. |

You could do the same thing with your fuel range — when you have a full tank, the indicator can be off in a corner somewhere. But as you start to run low, the indicator can get bigger or more prominent, to start nagging you to refuel. By having the displays all be “virtual” on a large LCD screen in the dash, the designers have incredible flexibility to create systems that present relevant information when required, and have it move out of the way when something more important comes along. (Come to think of it, this would be an awesome feature to have on turn-signal indicators — after you’ve kept your blinker on for more than 10 seconds, it would start to get bigger and brighter. Maybe then people would stop driving with their turn indicator permanently on.)

Collision avoided: The V2X command center

Also in the lab was a huge (3 by 5 foot) flat-panel touchscreen, mounted at an angle that’s aggressively unfriendly to coffee cups (probably for that very reason). It’s reminiscent of Star Trek’s main transporter control station, but it’s used to control and display the simulation environment’s V2V (vehicle to vehicle) and V2I (vehicle to infrastructure) data. It acts as a command center to control and reveal the innards of what’s going on in the simulation environment:

When I was there, we ran a vehicle collision avoidance scenario. Two vehicles (the Jeep and the Highlander, of course; they’re tied in to the system) were on a collision course (one was southbound and one was eastbound in a grid-style road system). Because they have V2V capabilities, both cars were aware of their impending doom. This showed up nicely on the V2V command center control panel: two cars heading towards each other, with little red circles emanating from them to indicate the realtime V2V “pings.” Of course, in plenty of time, the Jeep slowed down to avoid the collision (the actual brake lights even went on!). The speed, GPS coordinates, direction, and even the current gear of each vehicle were all shown on the master console. Towards the end of my visit I almost had Allan convinced to do another master control console for the OBD-II connector so you could interact with all of the information in each car. What can I say? I like front panels. (I’m a reformed PDP-8 collector.)
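To make the scenario concrete, here is a minimal sketch of the kind of check a V2V-aware vehicle could run on the position and velocity data it receives. It is not the algorithm used in the QNX simulation environment, which isn’t described here. The flat x/y coordinates in meters, the `VehicleState` type, the 5-meter safe distance, and the 15-second horizon are all assumptions made for the example, as is the choice of the Jeep as the southbound car.

```python
import math
from dataclasses import dataclass


@dataclass
class VehicleState:
    """Hypothetical V2V payload: position and velocity on a flat grid."""
    name: str
    x_m: float      # east position, meters
    y_m: float      # north position, meters
    vx_mps: float   # eastward speed, meters per second
    vy_mps: float   # northward speed, meters per second


def closest_approach(a, b):
    """Return (time_s, distance_m) of closest approach at constant velocity."""
    rx, ry = b.x_m - a.x_m, b.y_m - a.y_m              # relative position
    vx, vy = b.vx_mps - a.vx_mps, b.vy_mps - a.vy_mps  # relative velocity
    speed_sq = vx * vx + vy * vy
    # With no relative motion the separation never changes.
    t = 0.0 if speed_sq == 0.0 else max(0.0, -(rx * vx + ry * vy) / speed_sq)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def collision_warning(a, b, safe_distance_m=5.0, horizon_s=15.0):
    """True if the vehicles come too close within the look-ahead horizon."""
    t, distance = closest_approach(a, b)
    return t <= horizon_s and distance < safe_distance_m


if __name__ == "__main__":
    # One car southbound, one eastbound, both 100 m from the intersection.
    jeep = VehicleState("Jeep", 0.0, 100.0, 0.0, -10.0)
    highlander = VehicleState("Highlander", -100.0, 0.0, 10.0, 0.0)
    print(collision_warning(jeep, highlander))

    # The Jeep brakes to half speed and the check is run again.
    jeep_slowed = VehicleState("Jeep", 0.0, 100.0, 0.0, -5.0)
    print(collision_warning(jeep_slowed, highlander))
```

The constant-velocity assumption keeps the math to a single dot product; a production system would also have to account for GPS error, message latency, and road geometry.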

The engineers in the concept garage are “in the zone.” They’re working in an environment that encourages innovation. Watch and see what they produce:

About Rob

Rob is president of Iron Krten Consulting, which provides technical leadership services, from software leadership consulting through to security and embedded software products, development, training and contract services. Rob is also engaged by QNX Software Systems to write marketing and technical documentation. Visit Rob's website.

Video: Paving the way to an autonomous future

|

| Lynn Gayowski |

The video below follows the journey of building our vehicles for CES 2016 and highlights the technologies we’re using to speed progress towards automated driving — and the list of tech that QNX covers is impressive! It includes advanced driver assistance systems (ADAS), V2X, and augmented reality, not to mention digital instrument clusters, in-car communication, and infotainment:

QNX Software Systems continues to innovate in automotive, with a vision for the evolution of automated driving and a trusted foundation for building reliable, adaptable systems. At risk of giving away the big finale, I think John Wall, head of QNX, sums up perfectly what QNX is on target for in the automotive industry: “We will dominate the cockpit of the car.” It’s a bold statement but we’re already amassing some imposing stats that back this up:

Labels: Acoustic processing, ADAS, Augmented reality, Autonomous cars, CES, Concept car, Concept team, Jeep Wrangler, Lynn Gayowski, QNX Garage, QNX OS, Reference vehicle, Toyota, V2X

Wednesday, 06 January 2016

The simpler, the better: a first look at the new QNX technology concept vehicle

Bringing the KISS principle to the dashboard.

“From sensors to smartphones, the car is experiencing a massive influx of new technologies, and automakers must blend these in a way that is simple, helpful, and non-distracting.” That statement comes from a press release we issued a year ago, but it’s as true today as it was then, if not more so. The fact is, the car is undergoing a massive transformation as it becomes more connected and more automated. And with that transformation comes higher volumes of data and greater system complexity.

But here’s the thing. From the driver’s perspective, this complexity doesn’t matter, nor should it matter. In fact, it can’t matter. Because the driver needs to stay focused on the most important thing: driving. (At least until fully automated driving becomes reality, at which point a nap might be in order!) Consequently, it’s the job of automakers and their suppliers to harness all these technologies in a simple, intuitive way that makes driving easier, safer, and more enjoyable. Specifically, they need to provide the driver with relevant, contextually sensitive information that is easy to consume, without causing distraction.

That is the challenge that the new QNX technology concept vehicle, based on a Toyota Highlander, sets out to explore.

So what are we waiting for? Let’s take a look! (And remember, you can click on any image to magnify it.)

The oh-so-glossy exterior

As with any QNX technology concept vehicle, it’s what’s inside that counts. But to signal that this is no ordinary Highlander, we gave the exterior a luxurious, brushed-metal finish that just screams to have its picture taken. So we obliged:

The integrated display that keeps you focused

When modifying the Highlander, simplicity was the watchword. So instead of equipping the vehicle with both a digital instrument cluster and a head unit, we created a “glass cockpit” that combines the functions of both systems, along with ADAS safety alerts, into one seamless display. Everything is presented directly in front of the driver, where it is easiest to see.

For instance, in the following scenario, the cockpit allows the driver to see several pieces of important information at a glance: a forward-collision warning, an alert that the car is exceeding the local speed limit by 12 mph, and turn-by-turn navigation:

Mind you, the cockpit can display much more information than you see here, including a tachometer, album art, incoming phone calls, and the current radio station. But to keep distraction to a minimum, it displays only the information that the driver currently requires, and no more. Because simplicity.

To further minimize distraction, the cockpit uses voice as the primary way to control the user interface, including control of media, navigation, and phone connectivity. As a result, drivers can access infotainment content while keeping their hands on the wheel and eyes on the road.

Thoughtful touches abound. For instance, the HERE Auto navigation software running in the cockpit interfaces with a HERE Auto Companion App running on a BlackBerry PRIV smartphone. So when the driver steps into the vehicle, navigation route information from the smartphone is transferred automatically to the vehicle, providing a continuous user experience. How cool is that?

Here’s a slightly different view of the cockpit, showing how it can display a photo of your destination — just the thing when you are driving to a location for the first time and would like visual confirmation of what it looks like:

Before I forget, here are some additional tech specs: the cockpit is built on the QNX CAR Platform for Infotainment, uses an interface based on Qt 5.5, integrates iHeartRadio, and runs on a Renesas R-Car H2 system-on-chip.

The acoustics feature that keeps you from shouting

The glass cockpit does a great job of keeping your eyes focused straight ahead. But what’s the use of that if, as a driver, you have to turn your head every time you want to speak to someone in the back seat? If you’ve ever struggled to hold a conversation in a car at highway speeds, especially in a larger vehicle, you know what I’m talking about.

QNX acoustics to the rescue! Earlier today, QNX Software Systems announced the QNX Acoustics Management Platform, a new solution that replaces the traditional piecemeal approach to in-car acoustics with a holistic model that enables faster time-to-production and lower system costs. The platform comes with several innovative features, including QNX In-Car Communication (ICC) technology, which enhances the voice of the driver and relays it to infotainment loudspeakers in the rear of the car.

Long story short: instead of shouting or having to turn around to be heard, the driver can talk normally while keeping his or her eyes on the road. QNX ICC dynamically adapts to noise conditions and adds enhancement only when needed. Better yet, it allows automakers to leverage their existing handsfree telephony microphones and infotainment loudspeakers.

The reference vehicle that keeps evolving

Before you go, I also want to share some updates to the QNX reference vehicle, which is based on a Jeep Wrangler. Like the Highlander, the Jeep got a slick new exterior for CES 2016:

Since 2012, the Jeep has been our go-to vehicle for showcasing the latest capabilities of the QNX CAR Platform for Infotainment. But for over a year now, it has done double-duty as a concept vehicle, showing how QNX technology can help developers build next-generation instrument clusters and ADAS solutions.

Take, for example, the Jeep’s new instrument cluster, which makes its debut this week at CES. In addition to providing all the information that you’d expect, such as speed and RPM, it displays crosswalk notifications, forward collision warnings, speed limit warnings, and turn-by-turn navigation:

The QNX reference vehicle also includes a full-featured head unit that demonstrates the latest out-of-the-box capabilities of the QNX CAR Platform for Infotainment. For example, in this image, the head unit is displaying HERE Auto navigation:

Other features of the platform include:

Additional tech specs: The Jeep’s cluster runs on a Qualcomm Snapdragon 602A processor and its user interface was designed by our partner Rightware, using the Rightware Kanzi tool. The head unit, meanwhile, runs on an Intel Atom E3827 processor.

ADAS, augmented reality, V2X, IoT, and more

I have only scratched the surface of what BlackBerry and QNX Software Systems are demonstrating this week at CES 2016. There’s much more to see and experience, including a very cool V2X demonstration, IoT solutions for the automotive and transportation industries, as well as ADAS and augmented reality systems that integrate with the digital clusters described in this post. To learn more, read the press release that QNX issued today and stay tuned to this channel.

|

| Paul Leroux |
