Bringing the KISS principle to the dashboard

Paul Leroux

“From sensors to smartphones, the car is experiencing a massive influx of new technologies, and automakers must blend these in a way that is simple, helpful, and non-distracting.” That statement comes from a press release we issued a year ago, but it’s as true today as it was then, if not more so. The fact is, the car is undergoing a massive transformation as it becomes more connected and more automated. And with that transformation comes higher volumes of data and greater system complexity.

But here’s the thing. From the driver’s perspective, this complexity doesn’t matter, nor should it matter. In fact, it can’t matter. Because the driver needs to stay focused on the most important thing: driving. (At least until fully automated driving becomes reality, at which point a nap might be in order!) Consequently, it’s the job of automakers and their suppliers to harness all these technologies in a simple, intuitive way that makes driving easier, safer, and more enjoyable. Specifically, they need to provide the driver with relevant, contextually sensitive information that is easy to consume, without causing distraction.

That is the challenge that the new QNX technology concept vehicle, based on a Toyota Highlander, sets out to explore.

So what are we waiting for? Let’s take a look! (And remember, you can click on any image to magnify it.)

The oh-so-glossy exterior

As with any QNX technology concept vehicle, it’s what’s inside that counts. But to signal that this is no ordinary Highlander, we gave the exterior a luxurious, brushed-metal finish that just screams to have its picture taken. So we obliged:

The integrated display that keeps you focused

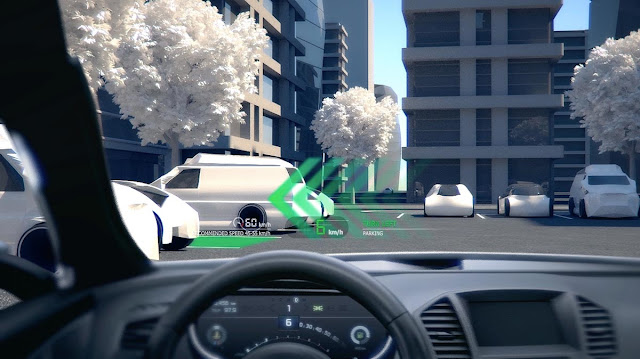

When modifying the Highlander, simplicity was the watchword. So instead of equipping the vehicle with both a digital instrument cluster and a head unit, we created a “glass cockpit” that combines the functions of both systems, along with ADAS safety alerts, into one seamless display. Everything is presented directly in front of the driver, where it is easiest to see.

For instance, in the following scenario, the cockpit allows the driver to see several pieces of important information at a glance: a forward-collision warning, an alert that the car is exceeding the local speed limit by 12 mph, and turn-by-turn navigation:

Mind you, the cockpit can display much more information than you see here, including a tachometer, album art, incoming phone calls, and the current radio station. But to keep distraction to a minimum, it displays only the information that the driver currently requires, and no more. Because simplicity.
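To make the "only what the driver currently requires" idea concrete, here is a minimal sketch in Python. It is purely illustrative and is not QNX code: the `DisplayItem` type, the priority scale, and the `select_items` function are all hypothetical names invented for this example, and the cap of three items is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DisplayItem:
    name: str
    priority: int  # hypothetical scale: lower number = more urgent
    active: bool   # whether the item is relevant right now


def select_items(items, max_items=3):
    """Return the names of the most urgent active items, up to max_items."""
    relevant = [item for item in items if item.active]
    relevant.sort(key=lambda item: item.priority)
    return [item.name for item in relevant[:max_items]]


# Hypothetical snapshot of what the cockpit could show at one moment.
items = [
    DisplayItem("forward-collision warning", 0, True),
    DisplayItem("speed-limit alert", 1, True),
    DisplayItem("turn-by-turn navigation", 2, True),
    DisplayItem("incoming phone call", 3, False),
    DisplayItem("tachometer", 4, False),
    DisplayItem("album art", 5, True),
]

print(select_items(items))
```

In this sketch the album art is active but still gets dropped, because three more urgent items already fill the display. A real system would presumably weigh far more context than a single priority number.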

To further minimize distraction, the cockpit uses voice as the primary way to control the user interface, including control of media, navigation, and phone connectivity. As a result, drivers can access infotainment content while keeping their hands on the wheel and eyes on the road.

Thoughtful touches abound. For instance, the HERE Auto navigation software running in the cockpit interfaces with a HERE Auto Companion App running on a BlackBerry PRIV smartphone. So when the driver steps into the vehicle, navigation route information from the smartphone is transferred automatically to the vehicle, providing a continuous user experience. How cool is that?

Here’s a slightly different view of the cockpit, showing how it can display a photo of your destination — just the thing when you are driving to a location for the first time and would like visual confirmation of what it looks like:

Before I forget, here are some additional tech specs: the cockpit is built on the QNX CAR Platform for Infotainment, uses an interface based on Qt 5.5, integrates iHeartRadio, and runs on a Renesas R-Car H2 system-on-chip.

The acoustics feature that keeps you from shouting

The glass cockpit does a great job of keeping your eyes focused straight ahead. But what’s the use of that if, as a driver, you have to turn your head every time you want to speak to someone in the back seat? If you’ve ever struggled to hold a conversation in a car at highway speeds, especially in a larger vehicle, you know what I’m talking about.

QNX acoustics to the rescue! Earlier today, QNX Software Systems announced the QNX Acoustics Management Platform, a new solution that replaces the traditional piecemeal approach to in-car acoustics with a holistic model that enables faster time to production and lower system costs. The platform comes with several innovative features, including QNX In-Car Communication (ICC) technology, which enhances the voice of the driver and relays it to infotainment loudspeakers in the rear of the car.

Long story short: instead of shouting or having to turn around to be heard, the driver can talk normally while keeping his or her eyes on the road. QNX ICC dynamically adapts to noise conditions and adds enhancement only when needed. Better yet, it allows automakers to leverage their existing handsfree telephony microphones and infotainment loudspeakers.
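For the curious, here is one way to picture "enhancement only when needed". This is a toy sketch, not the QNX ICC algorithm: the function name `reinforcement_gain_db`, the 60 dB threshold, the slope, and the 12 dB cap are all assumptions chosen for illustration.

```python
def reinforcement_gain_db(noise_db, threshold_db=60.0, slope=0.5, max_gain_db=12.0):
    """Return a hypothetical voice-reinforcement gain for a given cabin noise level.

    Below the threshold no enhancement is added. Above it, the gain grows
    linearly with the noise level and is capped at max_gain_db.
    """
    if noise_db <= threshold_db:
        return 0.0
    return min((noise_db - threshold_db) * slope, max_gain_db)


# Quiet cabin, highway cruising, and very loud conditions (illustrative values).
for noise in (50.0, 70.0, 100.0):
    print(noise, reinforcement_gain_db(noise))
```

The point of the sketch is the shape of the curve: nothing is added in a quiet cabin, and the boost is bounded so the rear loudspeakers never overwhelm the passengers.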

The reference vehicle that keeps evolving

Before you go, I also want to share some updates to the QNX reference vehicle, which is based on a Jeep Wrangler. Like the Highlander, the Jeep got a slick new exterior for CES 2016:

Since 2012, the Jeep has been our go-to vehicle for showcasing the latest capabilities of the QNX CAR Platform for Infotainment. But for over a year now, it has done double duty as a concept vehicle, showing how QNX technology can help developers build next-generation instrument clusters and ADAS solutions.

Take, for example, the Jeep’s new instrument cluster, which makes its debut this week at CES. In addition to providing all the information that you’d expect, such as speed and RPM, it displays crosswalk notifications, forward collision warnings, speed limit warnings, and turn-by-turn navigation:

The QNX reference vehicle also includes a full-featured head unit that demonstrates the latest out-of-the-box capabilities of the QNX CAR Platform for Infotainment. For example, in this image, the head unit is displaying HERE Auto navigation:

Other features of the platform include:

- A voice interface that uses natural language processing, making it easy to launch applications, play music, select radio stations, control volume, use the navigation system, and perform a variety of other tasks.

- A new, easy-to-navigate UI based on Qt 5.5 that supports a variety of touch gestures, including tap, swipe, pinch, and zoom.

- QNX acoustics technology that enables clear, easy-to-understand hands-free calls through advanced echo cancellation and noise reduction.

- Cellular connectivity provided by the QNX Wireless Framework, which simplifies system design by managing the complexities of modem control on behalf of applications.

- Flexible support for a variety of smartphone integration protocols.

Additional tech specs: The Jeep’s cluster runs on a Qualcomm Snapdragon 602A processor and its user interface was designed by our partner Rightware, using the Rightware Kanzi tool. The head unit, meanwhile, runs on an Intel Atom E3827 processor.

ADAS, augmented reality, V2X, IoT, and more

I have only scratched the surface of what BlackBerry and QNX Software Systems are demonstrating this week at CES 2016. There’s much more to see and experience, including a very cool V2X demonstration, IoT solutions for the automotive and transportation industries, as well as ADAS and augmented reality systems that integrate with the digital clusters described in this post. To learn more, read the press release that QNX issued today and stay tuned to this channel.